Automatically test your Web Messenger Deployments

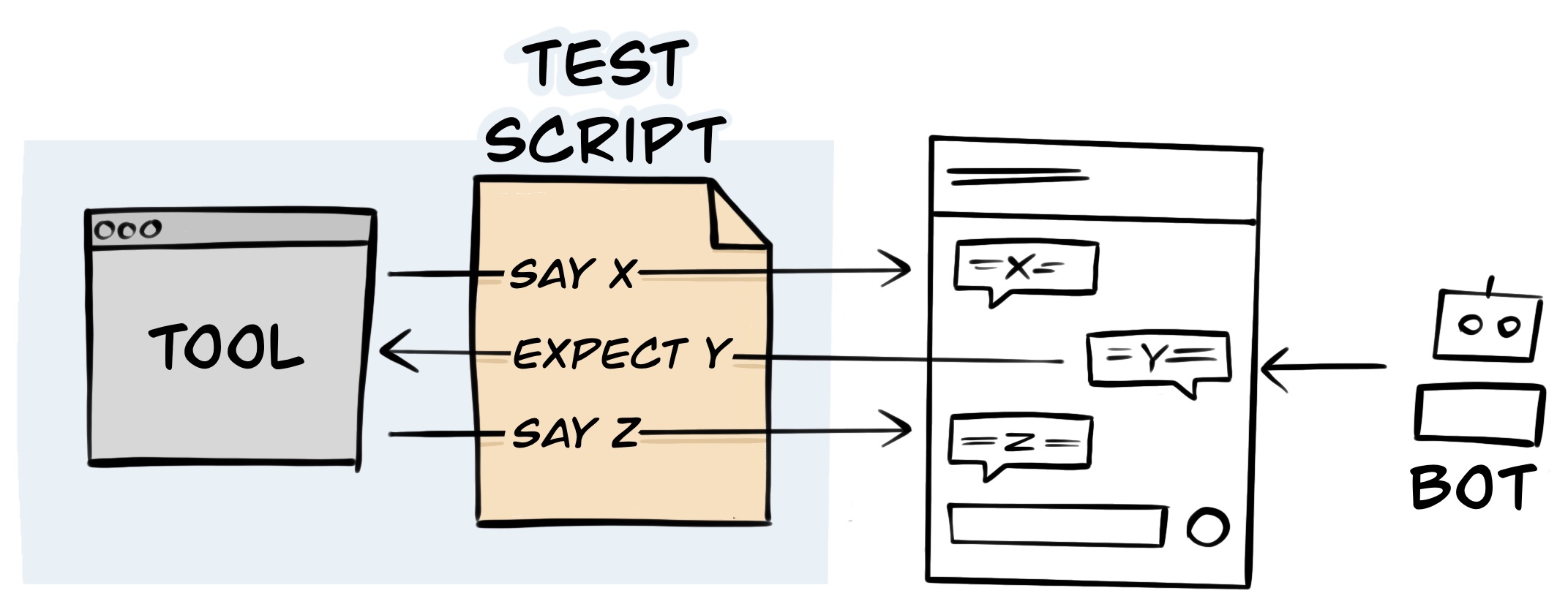

This tool automatically tests the behaviour of Genesys Chatbots and Architect flows behind Genesys’ Web Messenger Deployments using:

- Scripted Dialogue - I say “X” and expect “Y” in response

- Generative AI - Converse with my chatbot and fail the test if it doesn’t do “X”

Why? Well, it makes testing:

- Fast - spot problems with your chatbots sooner than you would with manual testing

- Repeatable - scenarios in scripted dialogues are run exactly as defined. Any response that deviates is flagged

- Customer focused - expected behaviour can be defined as scenarios before development commences

- Automatic - being a CLI tool means it can be integrated into your CI/CD pipeline, or run on a scheduled basis e.g. to monitor production

The test shown above uses the following dialogue script:

    config:
      deploymentId: xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx
      region: xxxx.pure.cloud
    scenarios:
      "Accept Survey":
        - say: hi
        - waitForReplyContaining: Can we ask you some questions about your experience today?
        - say: Yes
        - waitForReplyMatching: Thank you! Now for the next question[\.]+
      "Decline Survey":
        - say: hi
        - waitForReplyContaining: Can we ask you some questions about your experience today?
        - say: No
        - waitForReplyContaining: Maybe next time. Goodbye
      "Provide Incorrect Answer to Survey Question":
        - say: hi
        - waitForReplyContaining: Can we ask you some questions about your experience today?
        - say: Not sure
        - waitForReplyContaining: Sorry I didn't understand you. Please answer with either 'yes' or 'no'
        - waitForReplyContaining: Can we ask you some questions about your experience today?
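The script uses two kinds of expectation, `waitForReplyContaining` and `waitForReplyMatching`. As a rough illustration only, and not the tool's own code, the difference can be sketched in Python. The sketch assumes that the first is a plain substring check and the second a regular-expression search. The function names are hypothetical.

```python
import re


def reply_contains(reply: str, expected: str) -> bool:
    """Hypothetical stand-in for waitForReplyContaining: substring check."""
    return expected in reply


def reply_matches(reply: str, pattern: str) -> bool:
    """Hypothetical stand-in for waitForReplyMatching: regex search."""
    return re.search(pattern, reply) is not None


# Example reply invented for illustration; the pattern is the one from the script.
reply = "Thank you! Now for the next question..."
pattern = r"Thank you! Now for the next question[\.]+"

contains_ok = reply_contains(reply, "Now for the next question")
matches_ok = reply_matches(reply, pattern)
# The pattern requires at least one trailing full stop, so this one fails.
matches_without_dots = reply_matches("Thank you! Now for the next question", pattern)
```

Under these assumptions, the regular-expression form is the one to reach for when part of the reply can vary, such as the number of trailing full stops.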

How it works

The tool uses Web Messenger’s guest API to simulate a customer talking to a Web Messenger Deployment. Once the tool starts an interaction, it follows instructions defined in a file called a ‘test-script’, which tells it what to say and what it should expect in response. If a response deviates from the test-script, the tool flags the test as a failure; otherwise the test passes.
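The pass/fail logic described above can be sketched as a small loop. This is a simplified illustration under stated assumptions, not the tool's implementation. The `send` callable is a hypothetical stand-in for the guest API, `fake_bot` is an invented chatbot, and replies are handled synchronously, whereas a real conversation is asynchronous and would need timeouts.

```python
import re
from typing import Callable, Dict, List

Step = Dict[str, str]


def _take_until(pending: List[str], predicate: Callable[[str], bool]) -> bool:
    """Consume queued replies until one satisfies the predicate."""
    while pending:
        if predicate(pending.pop(0)):
            return True
    return False


def run_scenario(steps: List[Step], send: Callable[[str], List[str]]) -> bool:
    """Return True if every expectation is met, False on the first deviation.

    `send` (hypothetical) takes the customer's message and returns the
    replies the bot sends back.
    """
    pending: List[str] = []  # replies received but not yet checked
    for step in steps:
        if "say" in step:
            pending.extend(send(step["say"]))
        elif "waitForReplyContaining" in step:
            expected = step["waitForReplyContaining"]
            if not _take_until(pending, lambda r: expected in r):
                return False
        elif "waitForReplyMatching" in step:
            pattern = step["waitForReplyMatching"]
            if not _take_until(pending, lambda r: re.search(pattern, r) is not None):
                return False
    return True


def fake_bot(message: str) -> List[str]:
    """Invented chatbot used only to exercise the sketch."""
    if message == "hi":
        return ["Can we ask you some questions about your experience today?"]
    if message == "No":
        return ["Maybe next time. Goodbye"]
    return ["Sorry I didn't understand you. Please answer with either 'yes' or 'no'"]


decline_survey = [
    {"say": "hi"},
    {"waitForReplyContaining": "Can we ask you some questions"},
    {"say": "No"},
    {"waitForReplyContaining": "Maybe next time. Goodbye"},
]
passed = run_scenario(decline_survey, fake_bot)
```

Here `passed` is `True` because the invented bot replies as the scenario expects. Changing the final expectation to text the bot never sends makes `run_scenario` return `False`, which corresponds to the tool flagging the test as a failure.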